Stability AI

AIOpen SourceProvider of multimodal generative models and production-ready media generation and editing tools for image, audio, video, 3D and language.

Stability AI

Provider of multimodal generative models and production-ready media generation and editing tools for image, audio, video, 3D and language.

About Stability AI

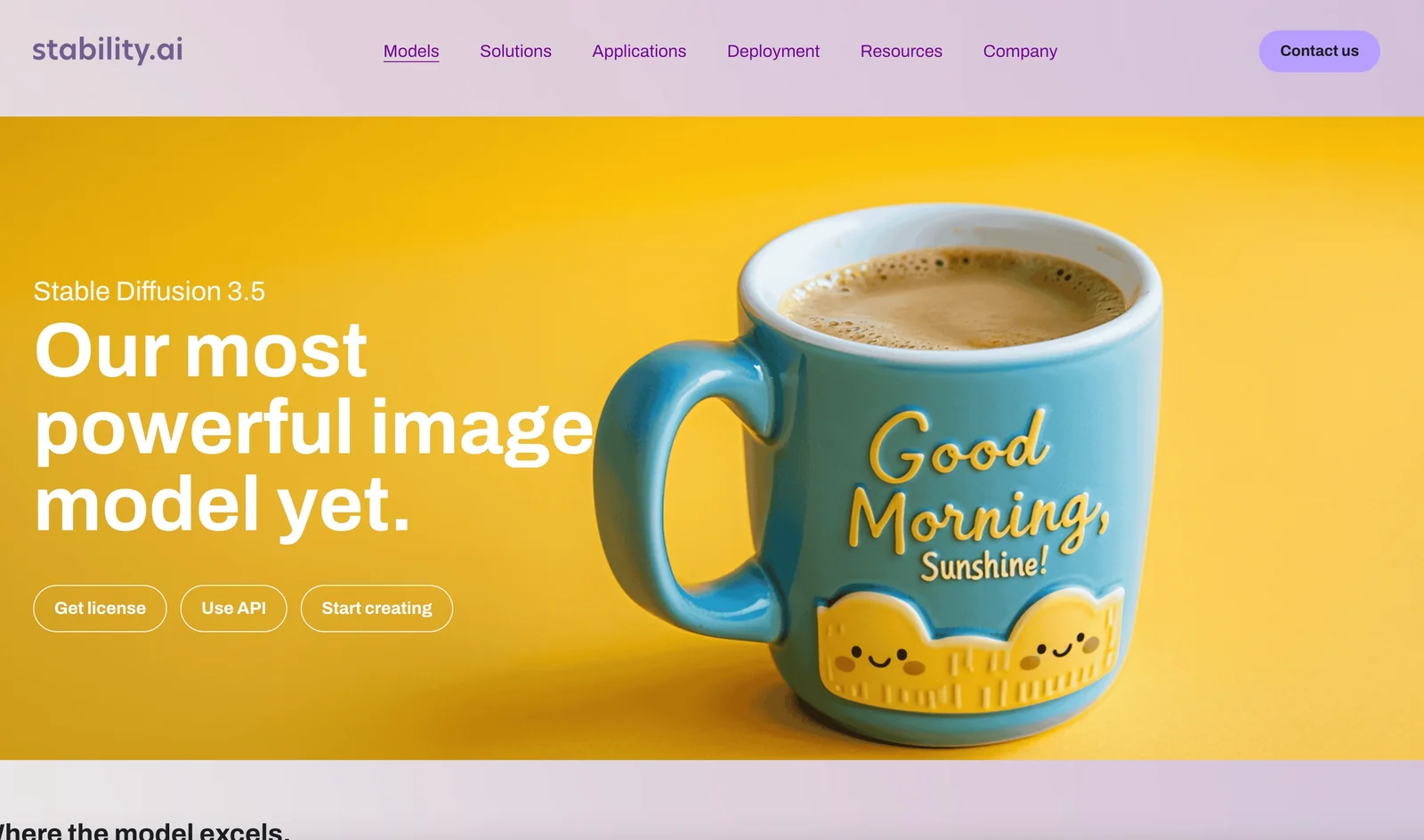

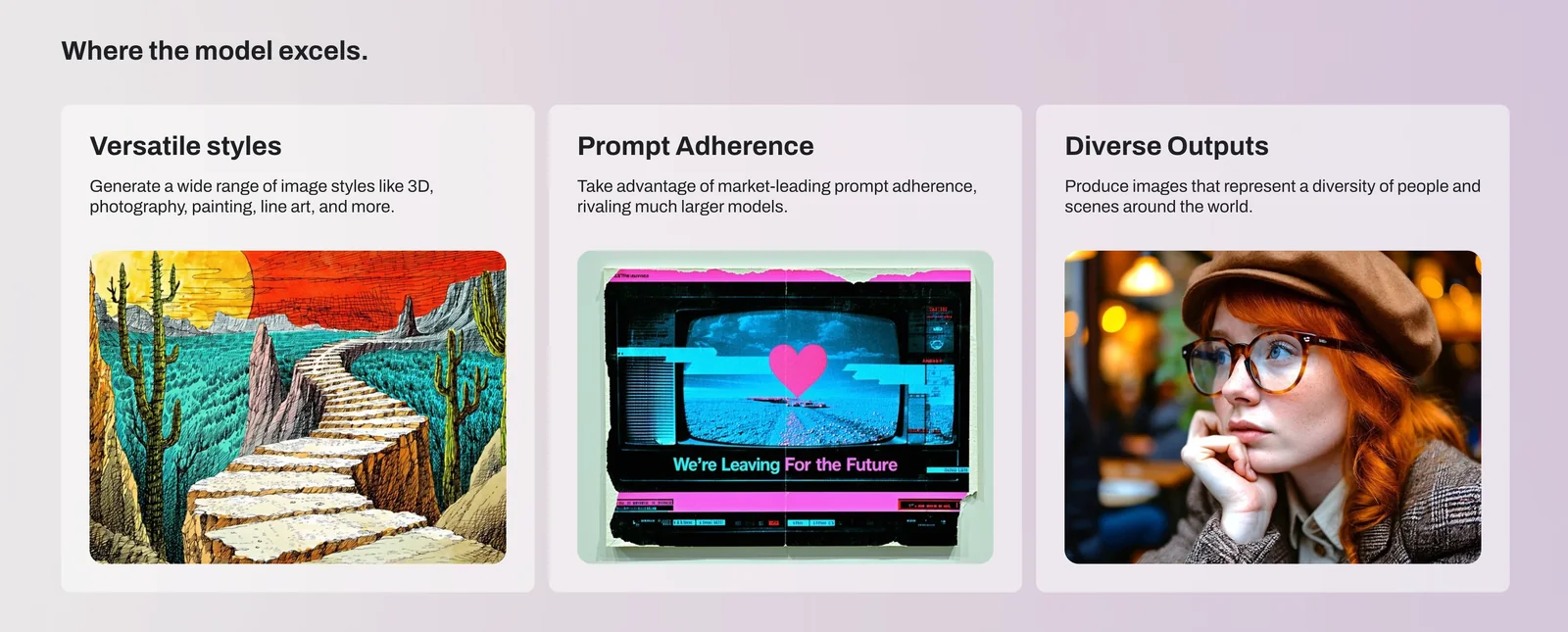

Stability AI builds and distributes multimodal generative models and developer tools for creating and editing images, audio, video, 3D assets and language outputs. The company maintains open-source model repositories (e.g., Stable Diffusion, StableLM, audio and 3D models), optimized model variants for different hardware, and a developer platform and tooling aimed at enterprise production use. Stability AI’s value comes from offering high-quality model weights, community-driven research, GPU-optimized variants, permissive research licensing for many models, and integrations and SDKs that enable teams to embed generative capabilities into applications and workflows.

Screenshots