LTX-2

AI · Open Source · Free

DiT-based audio-video foundation model delivering synchronized high-fidelity video and audio with production-ready pipelines and LoRA trainer.

About LTX-2

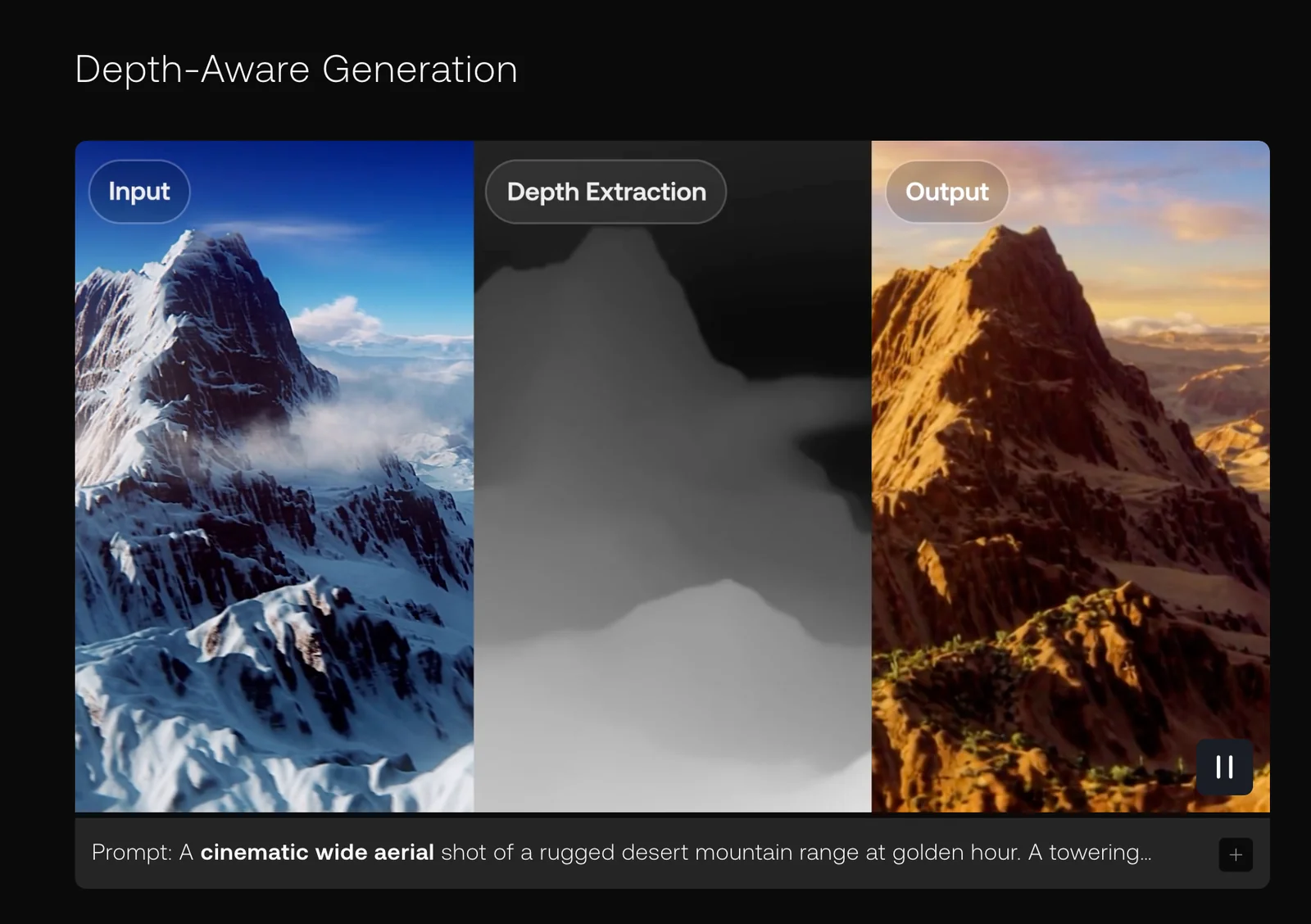

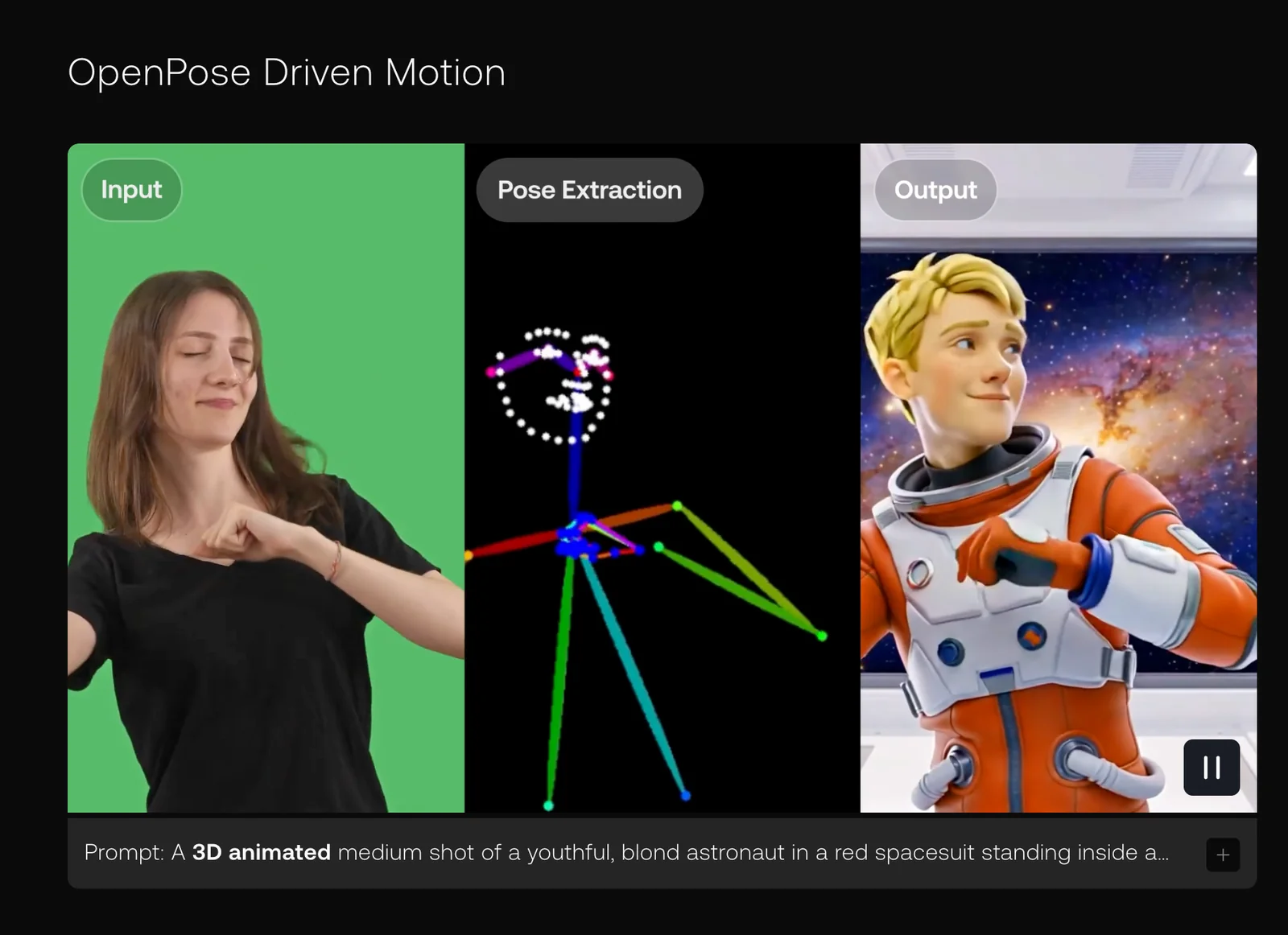

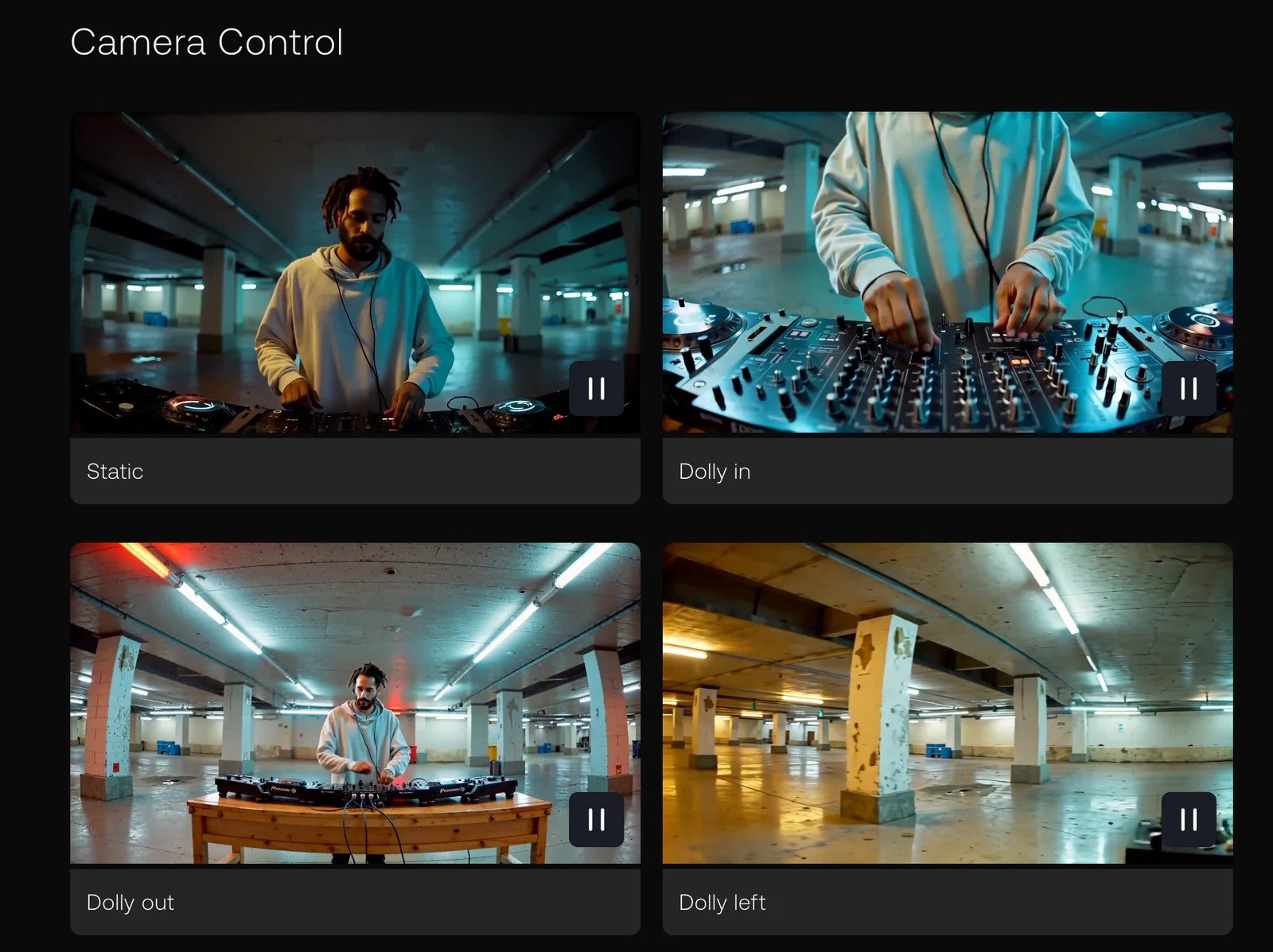

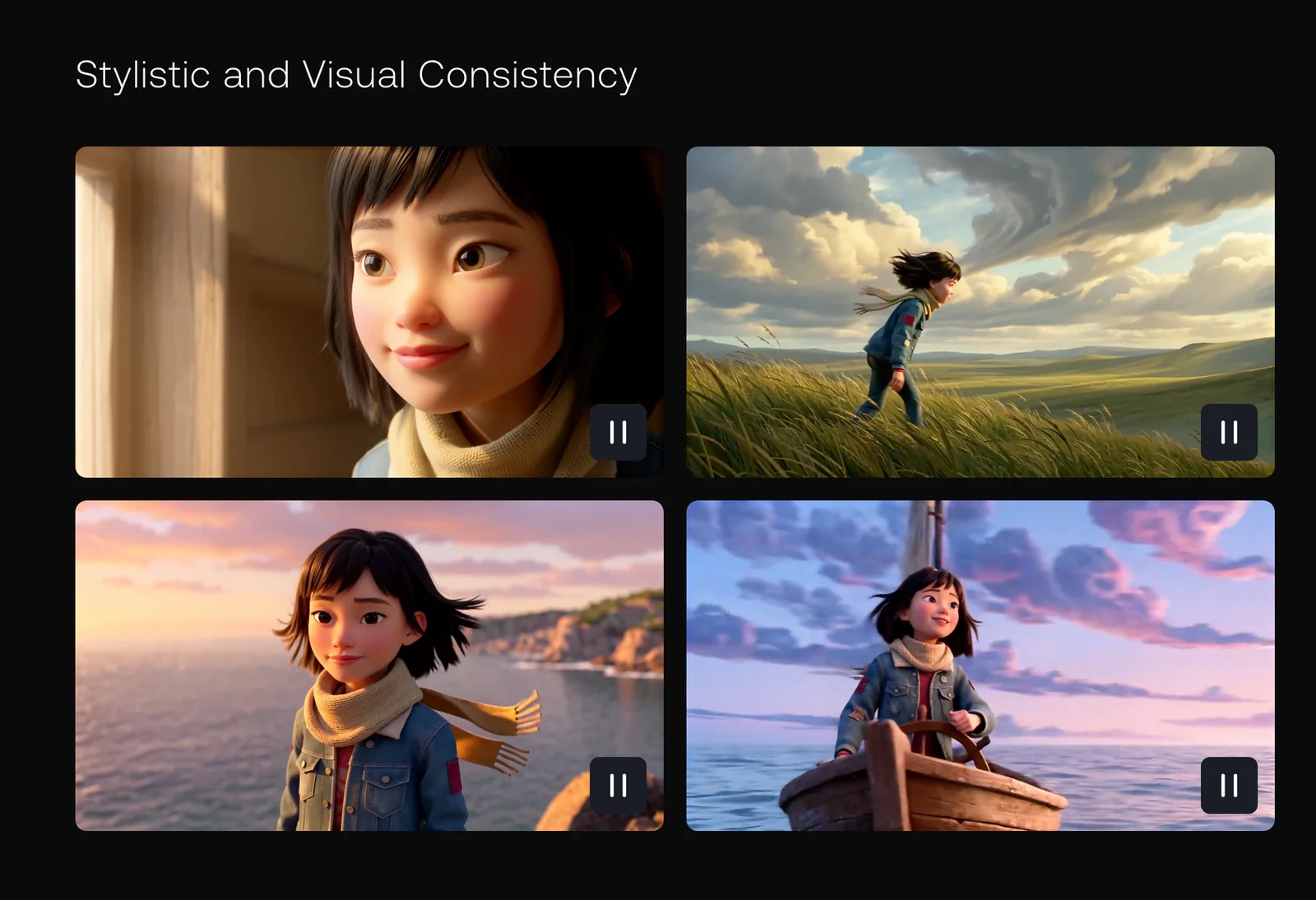

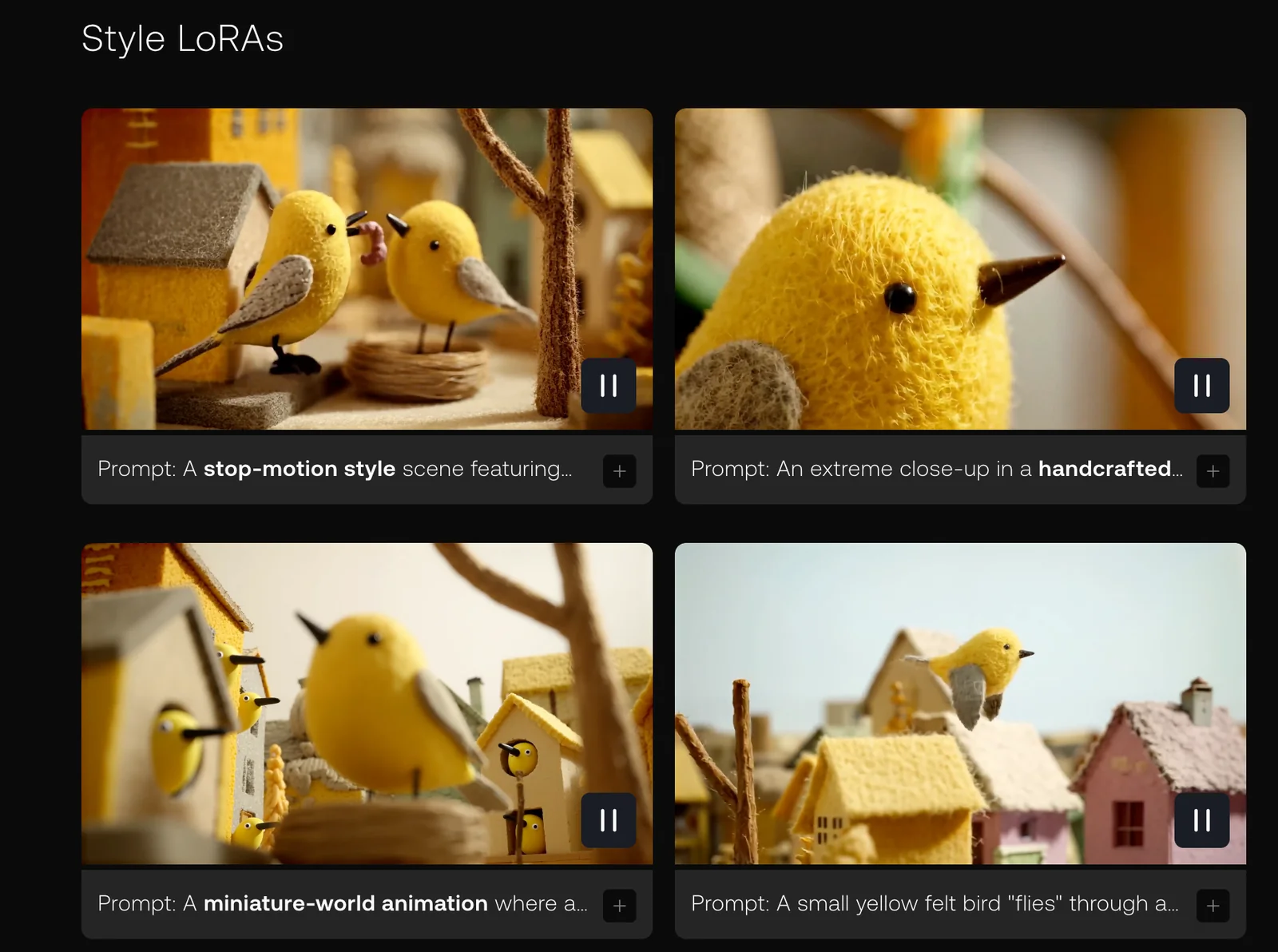

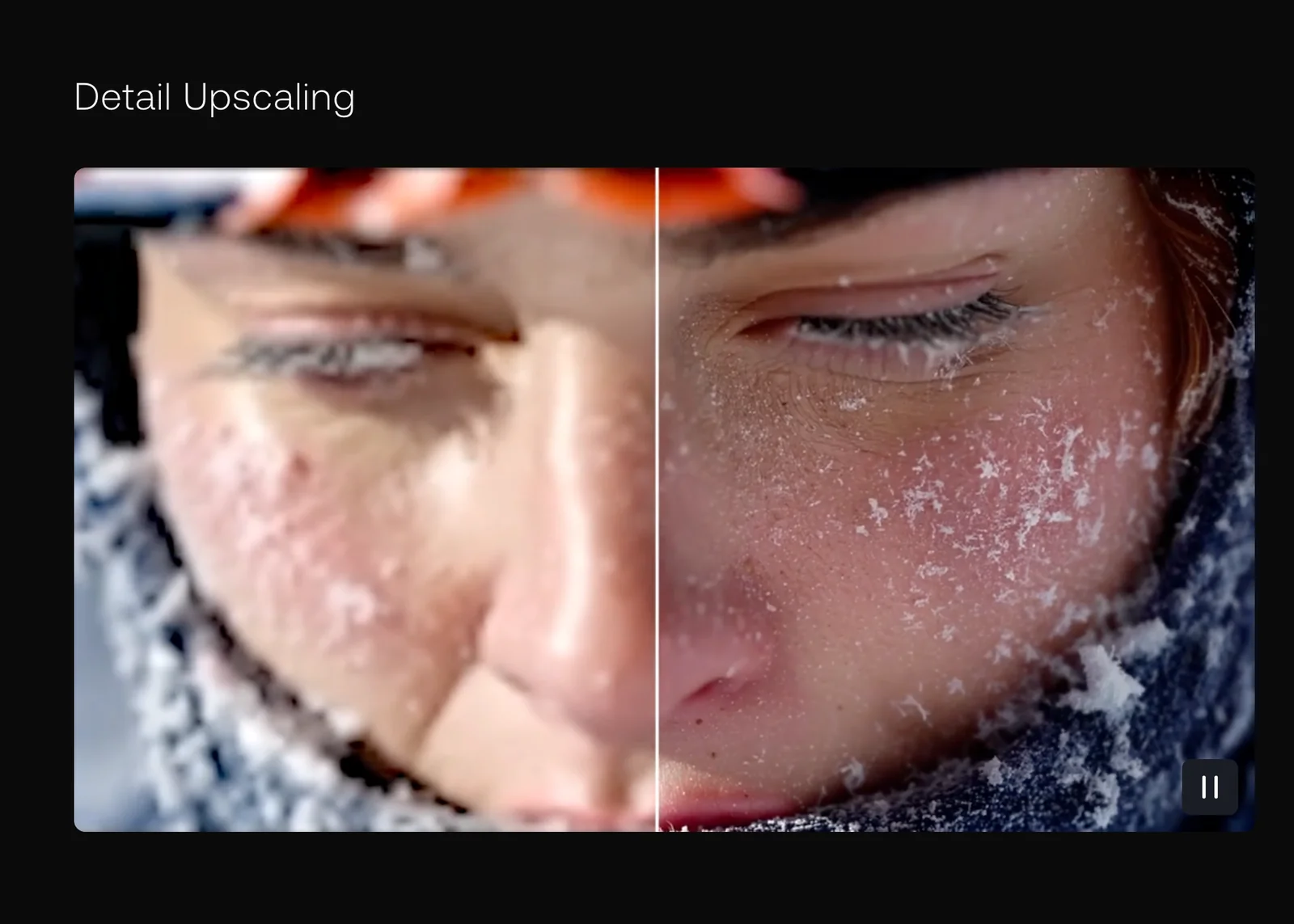

LTX-2 is a DiT-based audio-video foundation model that generates synchronized audio and video in a single coherent process. It pairs a video VAE, an audio VAE, and a vocoder with transformer-based diffusion to produce high-fidelity output, and it supports multi-keyframe conditioning and production-ready rendering pipelines. The project has three components: ltx-core (the model and inference stack), ltx-pipelines (ready-to-run text→video, image→video, video→video, and keyframe pipelines), and ltx-trainer (tools for LoRA and full fine-tuning). Optimizations such as FP8 transformer support, gradient-estimation denoising, and multi-stage (spatial + temporal) upscalers, together with LoRA/IC-LoRA training, make it practical for research and production use cases that demand synchronized audiovisual output, long generations, and fine-grained creative control.
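To illustrate why LoRA training is lighter than full fine-tuning, here is a minimal sketch of the generic LoRA idea: a frozen weight matrix W is adjusted by a low-rank product B·A scaled by alpha/r. This is not code from ltx-trainer; the function names (`lora_effective_weight`, `param_counts`), the matrix sizes, and the plain-list representation are all assumptions chosen for illustration.

```python
# Generic LoRA sketch in pure Python (illustrative only, not the ltx-trainer API).

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * (B @ A), where r is the LoRA rank.

    w: d_out x d_in frozen base weight
    a: r x d_in trainable down-projection
    b: d_out x r trainable up-projection
    """
    r = len(a)
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def param_counts(d_out, d_in, r):
    """Return (full fine-tuning parameters, LoRA parameters) for one matrix."""
    return d_out * d_in, r * (d_out + d_in)


# Hypothetical tiny layer: 2 outputs, 3 inputs, rank 1.
w = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
a = [[0.5, -0.5, 1.0]]
b_zero = [[0.0], [0.0]]  # B is commonly initialized to zero
b_trained = [[1.0], [2.0]]

unchanged = lora_effective_weight(w, a, b_zero, alpha=1.0)
adapted = lora_effective_weight(w, a, b_trained, alpha=1.0)
```

With B at zero the adapted layer matches the base layer exactly, so training starts from the pretrained behavior. For a hypothetical 4096×4096 projection at rank 16, `param_counts` gives 16,777,216 parameters for full fine-tuning versus 131,072 trainable parameters for LoRA.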

Screenshots