LMArena

Open platform for crowdsourced benchmarking and live leaderboards that ranks chatbots and LLMs using user votes and automated evaluations.

About LMArena

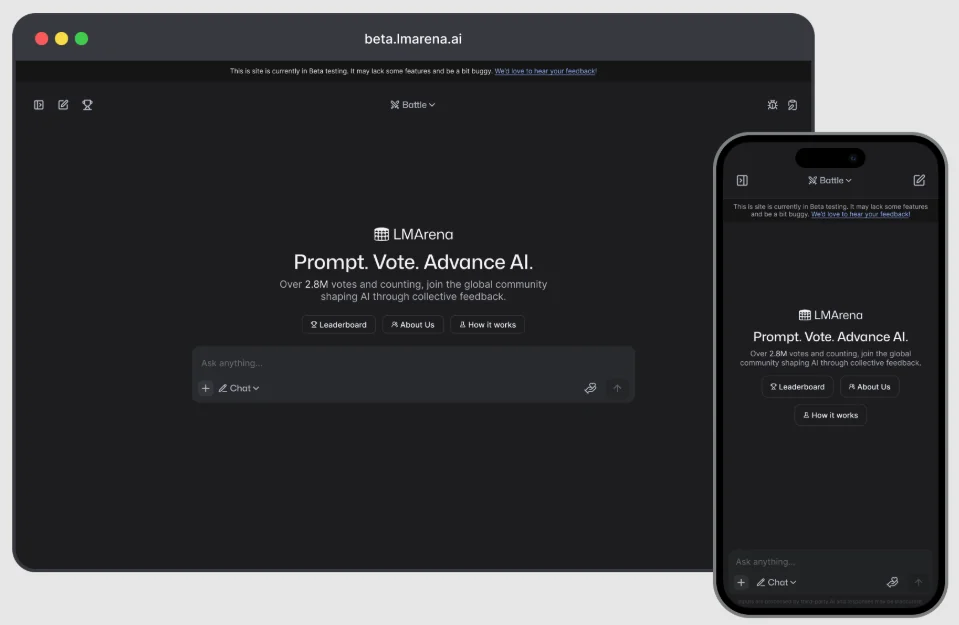

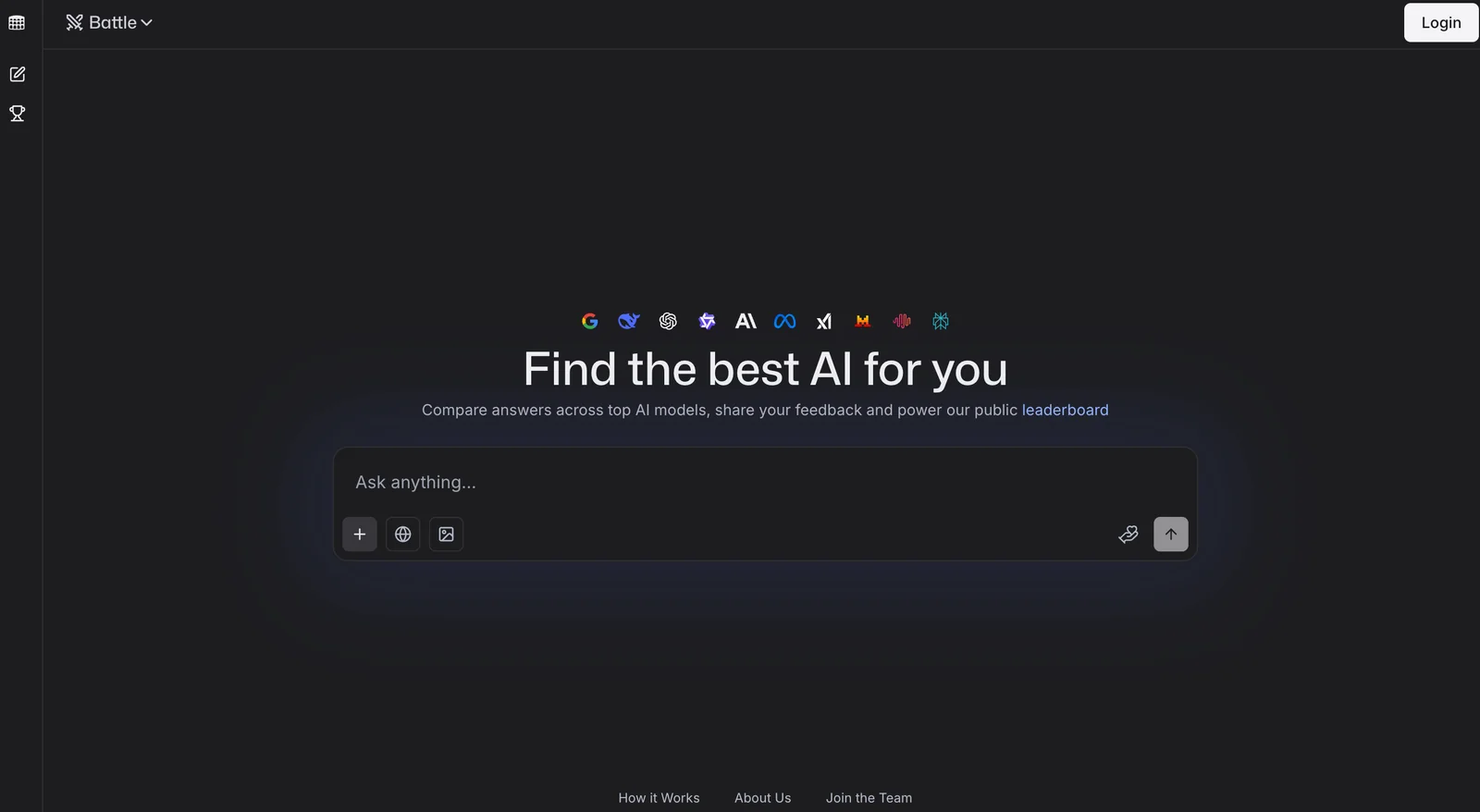

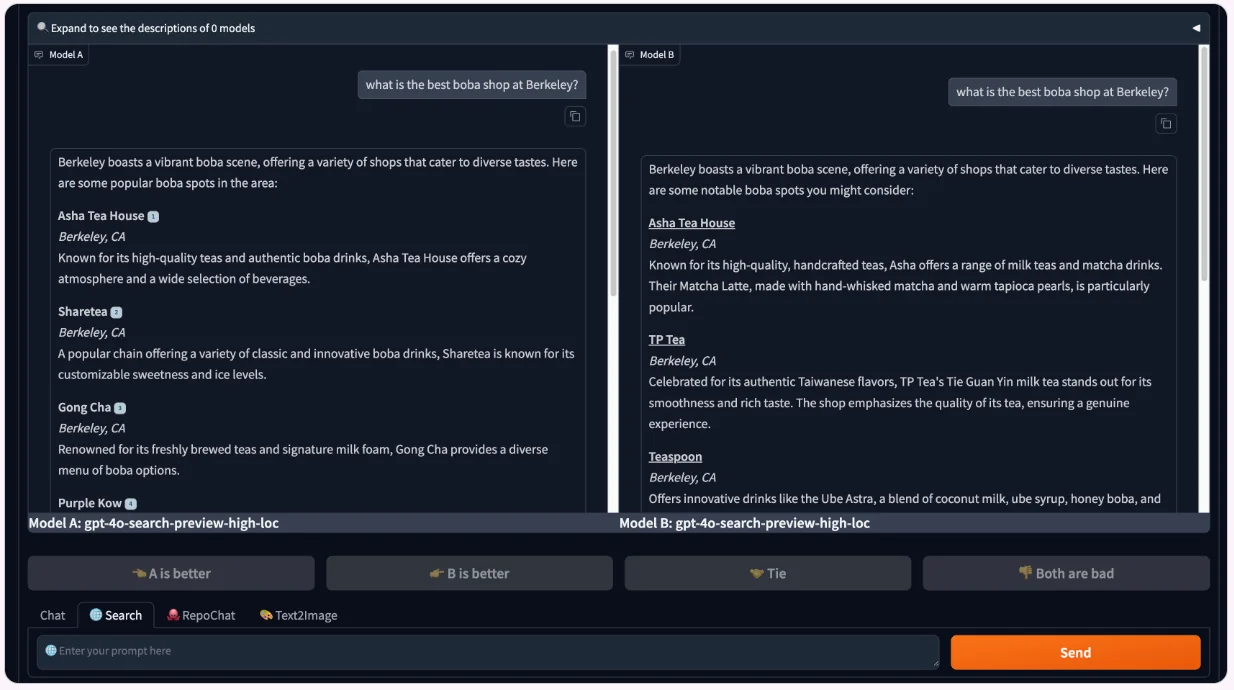

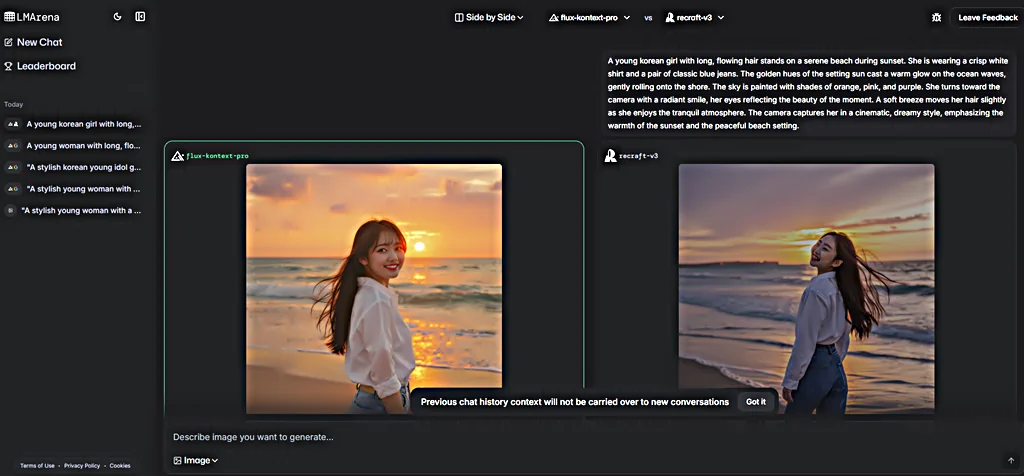

LMArena (also known as Chatbot Arena) is an open platform for crowdsourced AI benchmarking. It lets users interact with chatbots, cast pairwise votes, and view live leaderboards. The platform aggregates large numbers of these pairwise comparisons and fits statistical models such as Bradley–Terry to estimate win rates and rankings. These are supplemented by automated evaluation suites such as Arena-Hard-Auto. The project also provides datasets, evaluation scripts, Hugging Face Spaces integrations, and model repositories that support reproducible comparisons and pre-deployment testing. Its value lies in combining human preference votes, curated evaluation sets, and open tooling into community-driven, transparent measures of conversational model performance.
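To illustrate how pairwise votes can be turned into a ranking with a Bradley–Terry model, here is a minimal Python sketch. It assumes votes arrive as `(winner, loser)` pairs and ignores ties. It fits strengths with the classic minorization-maximization (MM) iteration. The function names `fit_bradley_terry` and `win_probability` are hypothetical. This is not LMArena's own pipeline, which may use a different estimator, tie handling, and rating scale.

```python
import math
from collections import defaultdict


def fit_bradley_terry(votes, max_iter=1000, tol=1e-10):
    """Estimate Bradley-Terry strengths from (winner, loser) vote pairs.

    Illustrative sketch (not LMArena's code). Uses the MM update
        p_i <- W_i / sum_j n_ij / (p_i + p_j)
    where W_i is model i's total wins and n_ij the number of i-vs-j votes.
    Ties are not modeled.
    """
    wins = defaultdict(int)
    losses = defaultdict(int)
    pair_counts = defaultdict(int)  # votes per unordered pair of models
    for winner, loser in votes:
        wins[winner] += 1
        losses[loser] += 1
        pair_counts[frozenset((winner, loser))] += 1

    models = sorted(set(wins) | set(losses))
    # A finite, positive MLE needs every model to have wins and losses
    # (a full check would also require the comparison graph to be connected).
    for m in models:
        if wins[m] == 0 or losses[m] == 0:
            raise ValueError(f"model {m!r} needs at least one win and one loss")

    strength = {m: 1.0 for m in models}
    for _ in range(max_iter):
        updated = {}
        for i in models:
            # Sum over opponents that i actually faced.
            denom = sum(
                pair_counts[frozenset((i, j))] / (strength[i] + strength[j])
                for j in models
                if j != i and frozenset((i, j)) in pair_counts
            )
            updated[i] = wins[i] / denom
        # Strengths are identified only up to scale: fix geometric mean = 1.
        log_mean = sum(math.log(v) for v in updated.values()) / len(updated)
        scale = math.exp(log_mean)
        updated = {m: v / scale for m, v in updated.items()}
        delta = max(abs(updated[m] - strength[m]) for m in models)
        strength = updated
        if delta < tol:
            break
    return strength


def win_probability(strength, a, b):
    """Bradley-Terry probability that model a beats model b."""
    return strength[a] / (strength[a] + strength[b])


if __name__ == "__main__":
    # Toy round-robin data: 10 votes per pair of hypothetical models A, B, C.
    votes = (
        [("A", "B")] * 7 + [("B", "A")] * 3
        + [("B", "C")] * 6 + [("C", "B")] * 4
        + [("A", "C")] * 8 + [("C", "A")] * 2
    )
    s = fit_bradley_terry(votes)
    print(sorted(s, key=s.get, reverse=True))  # ['A', 'B', 'C']
    print(round(win_probability(s, "A", "C"), 3))
```

Under this model, P(a beats b) = p_a / (p_a + p_b). Because only ratios of strengths matter, the sketch pins the geometric mean at 1. Taking the logarithm of each strength gives an additive, Elo-like score that is easier to display on a leaderboard.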

Screenshots